Qualys is an awesome product, but have you tried fetching "old" vulnerability data for reporting purposes? Not possible, the way that solution scaled, and new modules built up around its core, the way "firstFound, lastFound" and some other params constantly change, its just not possible to have insight in vulnerability data - retrospectively.

Default scheduled reports? - Have you looked at those, they gave me a 80-90s matrix vibe, not that I don't like matrix, its just that upper management most likely does not.

Here is a 'simple' automation solution that will allow you to have your vulnerability data always present and searchable in trend manner inside power bi, as well as in pure csv so you can scale your charts however you need.

I am going to store results on mounted share folder, so you can follow this blog to setup mounted share, env vars, fstab... and return for the rest.

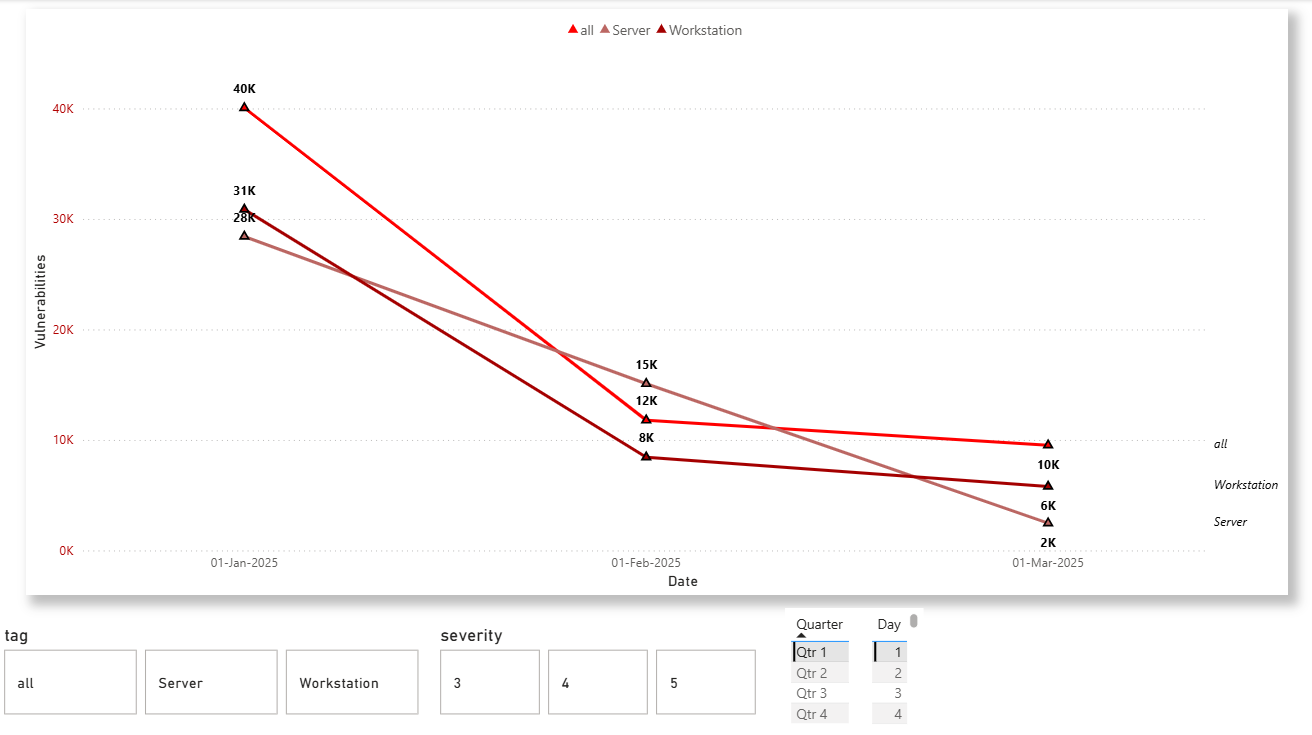

You are going to end up with this.

You can toggle on/off filters, showing only servers or workstations, specific severities, quarters, days, dates, date ranges, etc...

Cool ha? Ye, upper management is going to love your charts, and you gonna hate your life for having to maintain and scale this solution - I've warned you, proceed with caution.

Code review

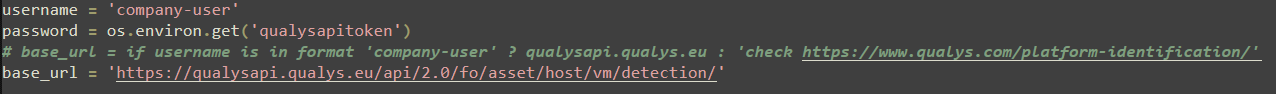

Some globals first. Your base_url may vary, and a way to know which one you want to hit up for api data, you can read the docs here.

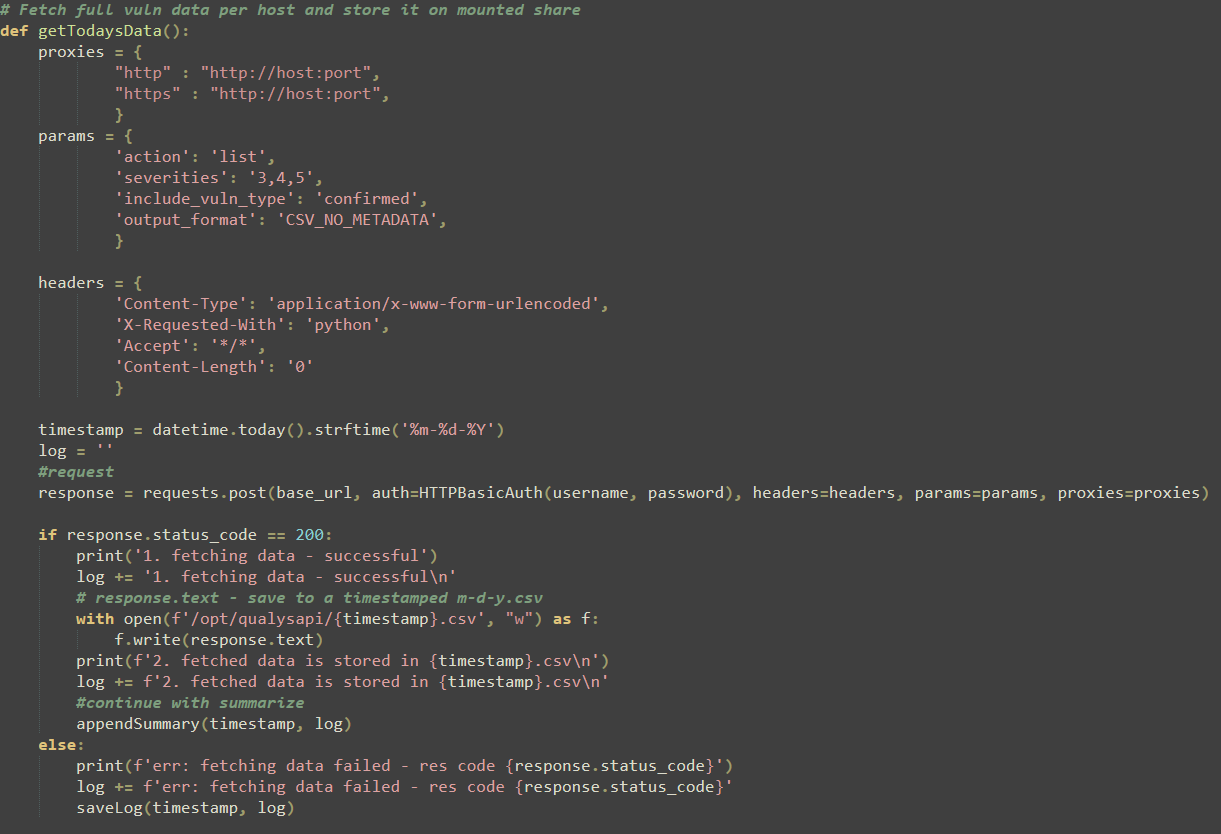

Inside getTodaysData we just prepare request for fetching latest vuln data, send it, save response inside timestamped csv - storing it on mounted share, meanwhile appending to log string for later logging purposes.

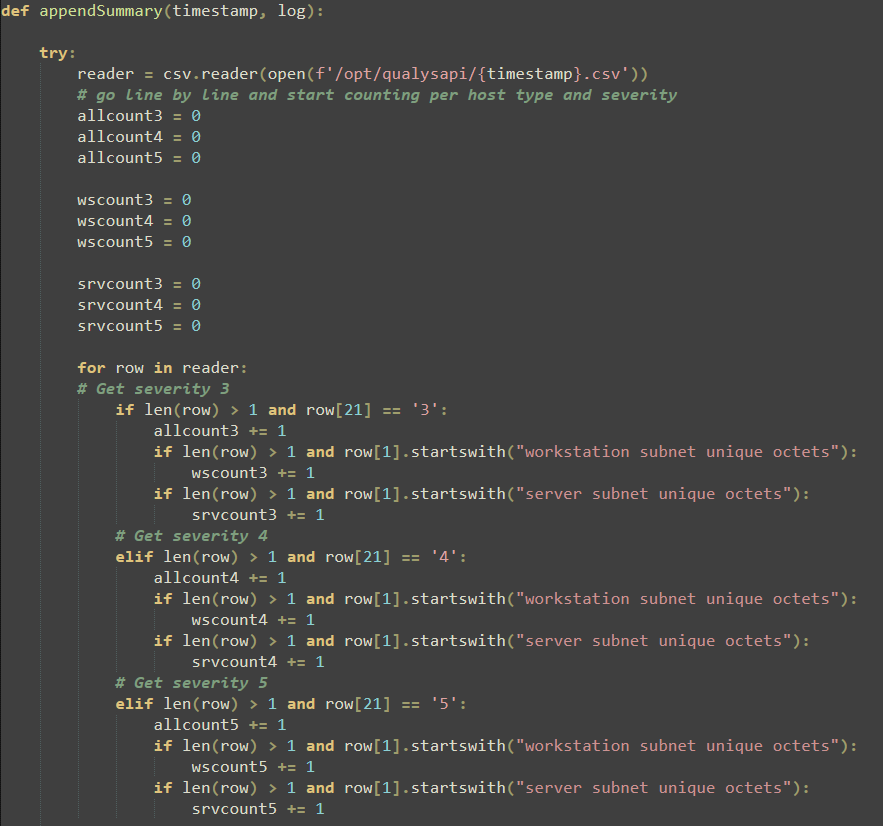

Upon successful response, we call apendSummary, we just count how many vulnerabilities there is per host type and severity.

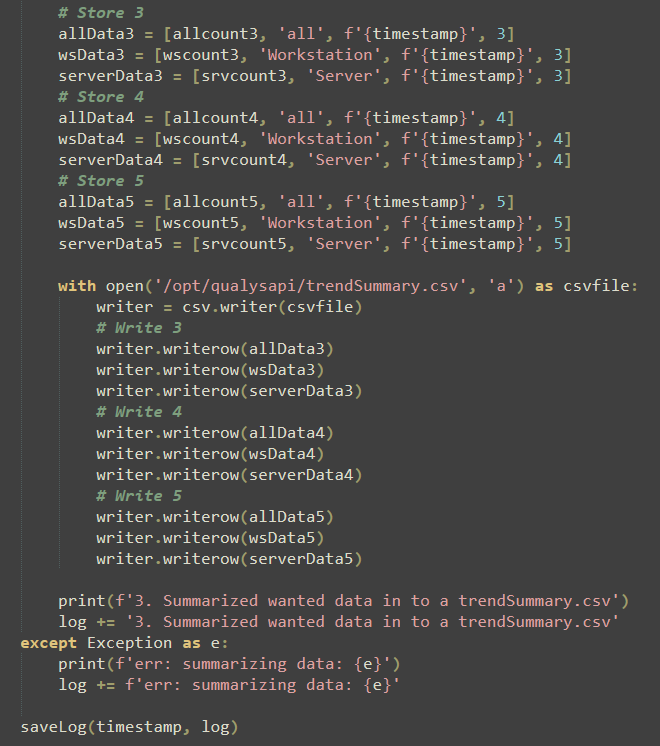

We are preparing data arrays to fulfill csv syntax we are going to draw charts from, which is: [vulnCount,tag,date,severity].

Now just append that data to pre-created trendSummary.csv with first row / column names manually pre-created (vulnCount,tag,date,severity).

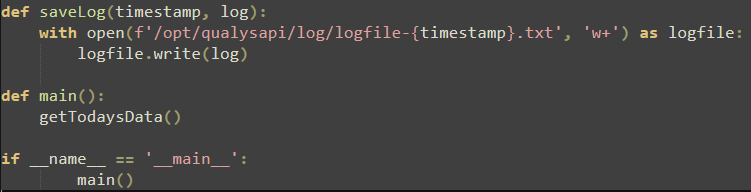

Have a timestamped logs inside log directory so you can make a weekly/monthly watcher that will grep for 'err:' string and notify you that specific export failed.

You will end up with bunch of vulnerability data per every host you have in your qualys instance in case you ever need it, and summarized data for charts/graphs.

Power Bi

Just ping me and I will send you a .pbit (template file) so you can only import your trendSummary and enjoy the graphs.

I haven't forgot..

import requests

from requests.auth import HTTPBasicAuth

from datetime import datetime

import os

import csv

username = 'company-user'

password = os.environ.get('qualysapitoken')

# base_url = if username is in format 'company-user' ? qualysapi.qualys.eu : 'check https://www.qualys.com/platform-identification/'

base_url = 'https://qualysapi.qualys.eu/api/2.0/fo/asset/host/vm/detection/'

# Fetch full vuln data per host and store it on mounted share

def getTodaysData():

proxies = {

"http" : "http://host:port",

"https" : "http://host:port",

}

params = {

'action': 'list',

'severities': '3,4,5',

'include_vuln_type': 'confirmed',

'output_format': 'CSV_NO_METADATA',

}

headers = {

'Content-Type': 'application/x-www-form-urlencoded',

'X-Requested-With': 'python',

'Accept': '*/*',

'Content-Length': '0'

}

timestamp = datetime.today().strftime('%m-%d-%Y')

log = ''

#request

response = requests.post(base_url, auth=HTTPBasicAuth(username, password), headers=headers, params=params, proxies=proxies)

if response.status_code == 200:

print('1. fetching data - successful')

log += '1. fetching data - successful\n'

# response.text - save to a timestamped m-d-y.csv

with open(f'/opt/qualysapi/{timestamp}.csv', "w") as f:

f.write(response.text)

print(f'2. fetched data is stored in {timestamp}.csv\n')

log += f'2. fetched data is stored in {timestamp}.csv\n'

#continue with summarize

appendSummary(timestamp, log)

else:

print(f'err: fetching data failed - res code {response.status_code}')

log += f'err: fetching data failed - res code {response.status_code}'

saveLog(timestamp, log)

def appendSummary(timestamp, log):

try:

reader = csv.reader(open(f'/opt/qualysapi/{timestamp}.csv'))

# go line by line and start counting per host type and severity

allcount3 = 0

allcount4 = 0

allcount5 = 0

wscount3 = 0

wscount4 = 0

wscount5 = 0

srvcount3 = 0

srvcount4 = 0

srvcount5 = 0

for row in reader:

# Get severity 3

if len(row) > 1 and row[21] == '3':

allcount3 += 1

if len(row) > 1 and row[1].startswith("workstation subnet unique octets"):

wscount3 += 1

if len(row) > 1 and row[1].startswith("server subnet unique octets"):

srvcount3 += 1

# Get severity 4

elif len(row) > 1 and row[21] == '4':

allcount4 += 1

if len(row) > 1 and row[1].startswith("workstation subnet unique octets"):

wscount4 += 1

if len(row) > 1 and row[1].startswith("server subnet unique octets"):

srvcount4 += 1

# Get severity 5

elif len(row) > 1 and row[21] == '5':

allcount5 += 1

if len(row) > 1 and row[1].startswith("workstation subnet unique octets"):

wscount5 += 1

if len(row) > 1 and row[1].startswith("server subnet unique octets"):

srvcount5 += 1

# Store 3

allData3 = [allcount3, 'all', f'{timestamp}', 3]

wsData3 = [wscount3, 'Workstation', f'{timestamp}', 3]

serverData3 = [srvcount3, 'Server', f'{timestamp}', 3]

# Store 4

allData4 = [allcount4, 'all', f'{timestamp}', 4]

wsData4 = [wscount4, 'Workstation', f'{timestamp}', 4]

serverData4 = [srvcount4, 'Server', f'{timestamp}', 4]

# Store 5

allData5 = [allcount5, 'all', f'{timestamp}', 5]

wsData5 = [wscount5, 'Workstation', f'{timestamp}', 5]

serverData5 = [srvcount5, 'Server', f'{timestamp}', 5]

with open('/opt/qualysapi/trendSummary.csv', 'a') as csvfile:

writer = csv.writer(csvfile)

# Write 3

writer.writerow(allData3)

writer.writerow(wsData3)

writer.writerow(serverData3)

# Write 4

writer.writerow(allData4)

writer.writerow(wsData4)

writer.writerow(serverData4)

# Write 5

writer.writerow(allData5)

writer.writerow(wsData5)

writer.writerow(serverData5)

print(f'3. Summarized wanted data in to a trendSummary.csv')

log += '3. Summarized wanted data in to a trendSummary.csv'

except Exception as e:

print(f'err: summarizing data: {e}')

log += f'err: summarizing data: {e}'

saveLog(timestamp, log)

def saveLog(timestamp, log):

with open(f'/opt/qualysapi/log/logfile-{timestamp}.txt', 'w+') as logfile:

logfile.write(log)

def main():

getTodaysData()

if __name__ == '__main__':

main()Hopefully, this helps. Stay healthy, stay safe,

Cheers,

bigfella